Building my own NAS

FreeNAS / TrueNAS based x86 server

Project published on March 01, 2018. Last updated on August 31, 2023.

With growing storage needs I sooner or later had to build a proper NAS. Before that, I was using multiple 4TB disks in my desktop to store stuff like music, movie, photo and TV series collections, backed up to a wild assortment of plain 3.5" HDDs put into a USB-SATA bay.

To have a centralised place for all these files, as well as for running server software like a media library with live transcoding, I decided to go with FreeNAS / TrueNAS, based on FreeBSD.

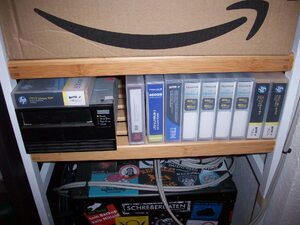

This is what the result looks like, currently living on a shelf in my storage room.

And these are the parts I went with.

| Part | Description | Cost |
|---|---|---|
| HDD | 5x 10TB Seagate IronWolf | 1399.75€ |
| CPU | Intel Celeron G3900 (2.8GHz, 2 cores) | 35.99€ |
| MoBo | ASRock C236 WSI | 192.49€ |
| RAM | Kingston ValueRAM (2x16GB, DDR4, ECC) | 334.75€ |
| SSD | Kingston A400 SSD (120GB) | 36.19€ |
| SATA | 5x SATA Cable 70cm | 19.50€ |
| Case | Lian Li PC TU 200 (used) | 150.00€ |
| PSU | Corsair SF450 (used) | 0.00€ |
| Cooler | ProlimaTech Samuel 17 (used) | 0.00€ |
| Tape | LTO-3 tape drive (used) | 0.00€ |
| UPS | APC Back-UPS BX700U | 77.99€ |
| Fan | 140mm be quiet! Silent Wings 3 PWM | 19.26€ |
| SCSI | LSI20320IE SCSI HBA | 19.00€ |
| Sum | | 2284.92€ |

Luckily I was able to buy the case, power supply, CPU cooler and tape drive as a used set from a friend of mine for a good price. To be honest, because of the issues mentioned below, I haven't yet gotten around to installing the cooler I got from him. Currently I'm still using the stock Intel cooler that was included with the Celeron.

For completeness' sake I also got an uninterruptible power supply for the NAS. Of course I connected the two via USB and run a daemon that shuts down the server when the UPS battery reaches a critical state. I also connected an old VGA LCD to the NAS and the UPS, as well as a USB keyboard, to be able to interact with the server if needed.
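The shutdown decision such a daemon makes can be sketched in a few lines. This is only an illustration, not the daemon's actual logic: it assumes the UPS status is available as `KEY : value` text (similar to what apcupsd's `apcaccess` prints), and the sample values, the function names and both thresholds are made up for this example.

```python
# Hypothetical status text in "KEY : value" form (assumed format and values).
SAMPLE_STATUS = """\
STATUS   : ONBATT
BCHARGE  : 18.0 Percent
TIMELEFT : 4.2 Minutes
"""


def parse_status(text: str) -> dict[str, str]:
    """Turn "KEY : value" lines into a dictionary, skipping lines without a colon."""
    status = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            status[key.strip()] = value.strip()
    return status


def should_shut_down(status: dict[str, str],
                     min_charge_pct: float = 20.0,
                     min_minutes: float = 5.0) -> bool:
    """Shut down only while on battery and below either (assumed) threshold."""
    on_battery = "ONBATT" in status.get("STATUS", "")
    # Missing fields fall back to values that never trigger a shutdown.
    charge = float(status.get("BCHARGE", "100").split()[0])
    minutes = float(status.get("TIMELEFT", "1e9").split()[0])
    return on_battery and (charge <= min_charge_pct or minutes <= min_minutes)


print(should_shut_down(parse_status(SAMPLE_STATUS)))  # prints True
```

Requiring the on-battery state in addition to a low charge avoids shutting down while the UPS is merely recharging after a short outage.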

CPU

As you can see from the parts list, there was some trouble with the CPU I selected initially. This was caused by a misunderstanding of mine regarding the mainboard. Turns out the C236 cannot boot without a graphics adapter available, probably because of the UEFI. It has an option to configure the UEFI via a serial port, but this still requires some kind of graphics output to work. The Intel Xeon I had did not have any kind of integrated graphics, so the system simply was not booting at all.

To get going, I then bought the cheapest option I could find that would fit the requirements, a Celeron G3900 for only 35€. This one has Intel HD Graphics 510 included and still supports ECC memory. I sold the Xeon on eBay to someone in Italy without significant loss. I initially went with the beefier Xeon for running VMs and transcoding media streams, but it turns out the Celeron seems to be more than powerful enough for my needs, at least for now.

ZFS

To have a "proper" NAS I of course decided to go with ECC RAM to avoid any data corruption in RAM. To at least somewhat match the size of my array, I went with 32GB.

With multi-TB drives now in common use, and considering their mean time between bit failures, a higher level of redundancy is really required. Otherwise, when a drive dies and all data has to be re-read to resilver the RAID array, the probability of hitting another failure is relatively high. For this reason I decided to go with RAID-Z2, which dedicates two drives' worth of space to redundancy, so two drives can die and the data is still readable.
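To put a rough number on that risk, here is a back-of-the-envelope sketch. The error rates are assumptions (values of the order commonly quoted on drive datasheets, not measurements from this build), bit errors are treated as independent, and every surviving disk is assumed to be read in full. ZFS only resilvers allocated data, so this is closer to a worst case.

```python
import math


def p_read_error(bytes_read: float, errors_per_bit: float) -> float:
    """Probability of at least one unrecoverable read error.

    Computes 1 - (1 - p)^n for n bits, using log1p/expm1 so the tiny
    per-bit probability does not get lost to floating-point rounding.
    """
    bits = bytes_read * 8
    return -math.expm1(bits * math.log1p(-errors_per_bit))


surviving_disks = 4   # five disks, one of them failed
disk_bytes = 10e12    # 10 TB per disk

# Assumed error rates per bit read; not taken from this build's drives.
for rate in (1e-14, 1e-15):
    p = p_read_error(surviving_disks * disk_bytes, rate)
    print(f"error rate {rate:.0e} per bit: P(read error during rebuild) = {p:.0%}")
```

Under these assumptions the chance of at least one read error while rebuilding is far from negligible, which is the argument for a second parity drive: such an error can still be corrected while the first failed disk is being replaced.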

Looking at my storage requirements at the time of starting this project and considering some healthy rate of growth, I decided to go with five 10TB disks, resulting in 50TB raw space and about 30TB of usable space after redundancy.

In June 2022, about four years after starting this project, I've now used up 16TB, so I think this was a good decision compared to 5x8TB or even 5x4TB.
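The capacity arithmetic behind that comparison is simple enough to write down. This is a nominal sketch: it ignores ZFS metadata, padding and reserved space, so the space actually reported by the pool will be somewhat lower. The function name is made up for this example.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int) -> float:
    """Nominal usable capacity: number of data disks times the disk size."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_tb


used_tb = 16  # space used by June 2022, as mentioned above

for disk_tb in (10, 8, 4):
    raw = 5 * disk_tb
    usable = raidz_usable_tb(disks=5, disk_tb=disk_tb, parity=2)
    verdict = "fits" if usable > used_tb else "already too small"
    print(f"5x{disk_tb}TB: {raw} TB raw, {usable} TB usable ({verdict})")

# Decimal terabytes converted to binary tebibytes, which many tools display.
usable_tib = raidz_usable_tb(5, 10, 2) * 1e12 / 2**40
print(f"30 TB is about {usable_tib:.1f} TiB")
```

With 16TB in use, a 5x4TB RAID-Z2 array (12TB nominal) would already have been outgrown, while 5x8TB would be two thirds full.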

Case

The Lian-Li PC TU 200 case I selected only fits 4 hard disks by default. But thanks to its modular nature, I was easily able to design a 3D printed mount for a fifth disk.

One thing I'm not quite happy with is the airflow inside the case. The front fits a 140mm fan that blows directly onto the disks. Nonetheless, the disks sit at just below 50°C, which is a bit high for my liking. Sometime in the future, I want to replace the case with a full-size ATX one with more easily accessible drive bays and more space for fans and cable routing.

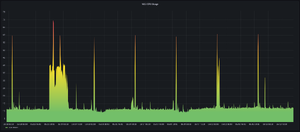

The screenshots above also show the average CPU usage, which confirms that the Celeron is plenty for now. The power usage is reported by the UPS via USB. Blank spots in the third graph are caused by the data-polling daemon running on my desktop PC, which is turned off at night. The spike at the end of the month in all three graphs is caused by the monthly ZFS scrub. To collect this data I'm using InfluxDB and Telegraf.

Tape Backup

As you can see from the parts list above, I also got an LTO-3 tape drive with the case I bought used. I kinda got into this idea thanks to my friend Philipp, who also uses a tape drive like this to back up his NAS. He even put the scripts he wrote for that on GitHub.

I have to admit, however, that I still haven't gotten around to actually implementing that. The drive is connected using a PCI Express SCSI HBA and is properly recognized by the OS, but I haven't used it otherwise.

Software

Installing and configuring TrueNAS (still called FreeNAS at the time I was setting it up) is pretty straightforward. Download the ISO, take a close look at the official documentation and follow it!

Besides a Debian VM configured for my IoT needs using my Ansible scripts, I run a Rancher instance for Docker containers, which currently only runs a PiHole productively, besides being used for some experiments here and there. I'm also using the great TrueNAS plugin system to run a local Plex and Nextcloud, although I'm not quite satisfied with these parts of my setup. But they do the job for now.

Link Aggregation Experiments (January 2020)

Both the C236 board and my desktop PC have two 1Gbit/s Ethernet NICs. So I decided to get a Netgear GS308T managed switch and attempt to use link aggregation to achieve faster connection speeds and actually be able to saturate my RAID.

Unfortunately I haven't been able to get it to work at all. I configured both the FreeBSD in TrueNAS as well as my Arch Linux desktop as described on the net, but the result was my whole home network completely breaking down in strange ways. WiFi devices could no longer connect outside through the router, even though the router wasn't involved at all in the aggregate links.

In the end I have to admit I've given up. To be honest, I don't really need the faster speeds. But if anyone has suggestions, I'm open to try it again!

Four-Year Update (June 2022)

Recently I was greeted by this log message in the TrueNAS web UI.

Device: /dev/ada5, 584 Currently unreadable (pending) sectors

Device: /dev/ada5, 584 Offline uncorrectable sectors
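Messages like these are easy to pick apart if you want to track the counts over time, for example alongside the other metrics. The following is a minimal sketch that assumes the alerts always follow the shape of the two lines above; the pattern and function name are made up for this example and are not part of TrueNAS or smartmontools.

```python
import re

# Matches lines shaped like: "Device: <path>, <count> <description> sectors"
ALERT_PATTERN = re.compile(
    r"Device: (?P<device>[^,\s]+), (?P<count>\d+) (?P<attribute>.+?) sectors$"
)


def parse_smart_alert(line: str):
    """Return (device, count, attribute), or None if the line has another shape."""
    match = ALERT_PATTERN.search(line.strip())
    if match is None:
        return None
    return match["device"], int(match["count"]), match["attribute"]


alerts = [
    "Device: /dev/ada5, 584 Currently unreadable (pending) sectors",
    "Device: /dev/ada5, 584 Offline uncorrectable sectors",
]
for alert in alerts:
    print(parse_smart_alert(alert))
```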

Taking a look at the ZFS pool status, read errors have already cropped up on two (!) drives. Guess I should have made sure not to get all drives from a single batch 🤷

Unfortunately hard disk prices are still high and I'm currently not able to afford two new drives. So for now I'm making sure I have a working backup and hope for the best 😅

UPS Battery Dead (May 2023)

To operate the server more safely I bought an APC Back-UPS 700 back when I built it. Sometime in 2022 I started getting alerts from the APC daemon that the battery needs to be replaced. That was after just four years, during which the UPS actually ran on battery only once, for less than two hours.

As I was getting quite used to ignoring any warnings popping up, I did the same here 😔

Now yesterday there was a planned electricity outage at my flat. When power returned, the UPS did not come back online again.

The outlet that's only surge protected was still working, but the main battery-backed outlets did not work, and the UPS itself did not turn on.

So I ordered a replacement battery 🔋 and also two new drives. 🖴

Getting out the old battery 🪫 took a lot of convincing.

Turns out the battery was very swollen and actually ruptured in multiple places. Some graphite-looking pieces fell out.

So please replace your UPS batteries more often! ⚠️ I don't think the charging circuitry in the UPS takes good care of the battery...

Installing the new battery was quick and simple. The UPS immediately started working again, as I had hoped.

Failed Disk Replacement (August 2023)

So after the first read errors appeared on two drives over a year ago, one of them finally failed enough for the ZFS pool to show a degraded status.

This was disk 5, the bottommost one in the 3D-printed mount. The server already runs hot, and the two drives with read errors report the highest temperatures. I suspect my mount doesn't help either.

Nonetheless I simply replaced the disk.

The filesystem resilvered without any issues and is now running fine again, though I suspect disk 3 will probably face a similar fate soon.